Databricks to Aurora Sync Accelerator

Enterprise-Grade Data Synchronization in 2-3 Weeks

Sync 500+ Delta Lake tables to Aurora MySQL with exactly-once delivery guarantees, automatic failover, and comprehensive observability. The production-ready solution ships with 37+ documentation files and battle-tested resilience patterns, and deploys in 2-3 weeks instead of the 4-6 months typical of custom development.

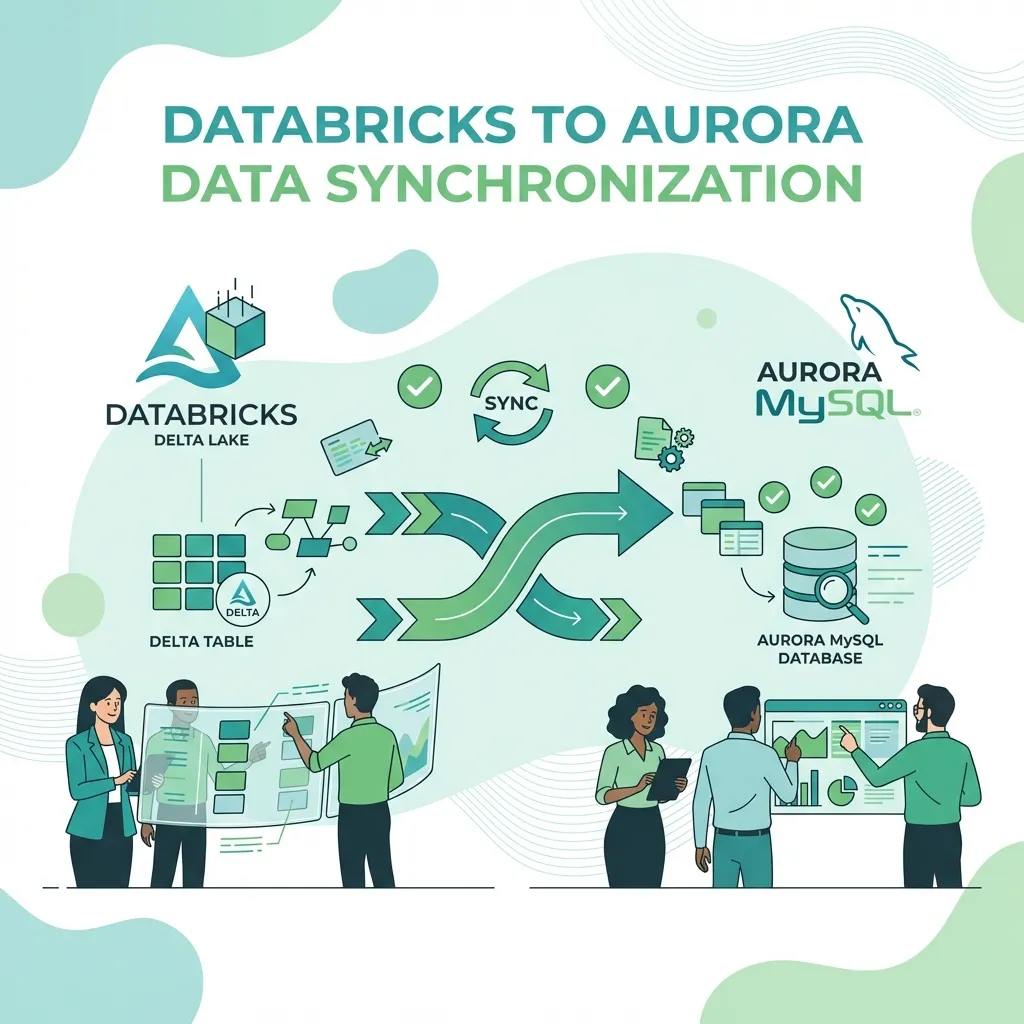

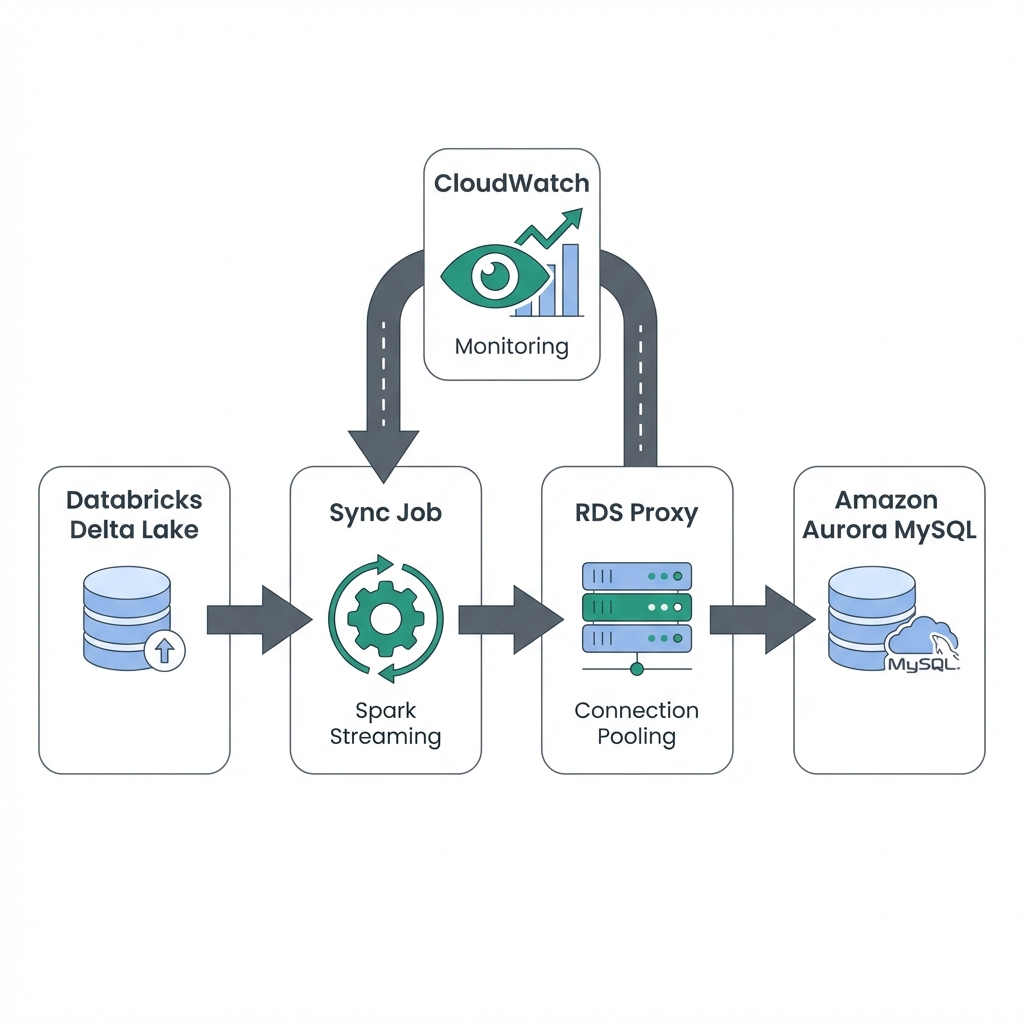

Architecture & Workflows

Our accelerator uses a resilient, event-driven architecture to ensure exactly-once delivery from Databricks to Aurora MySQL.

Solution Highlights

Enterprise Scale

Support for 500+ tables with configurable sync frequency. Handles millions of rows per day with automated batch grouping for optimal performance.

- ✓ Scale to 500+ concurrent tables

- ✓ Configurable sync frequency (minimum interval: 1 minute)

- ✓ Automated batch grouping logic

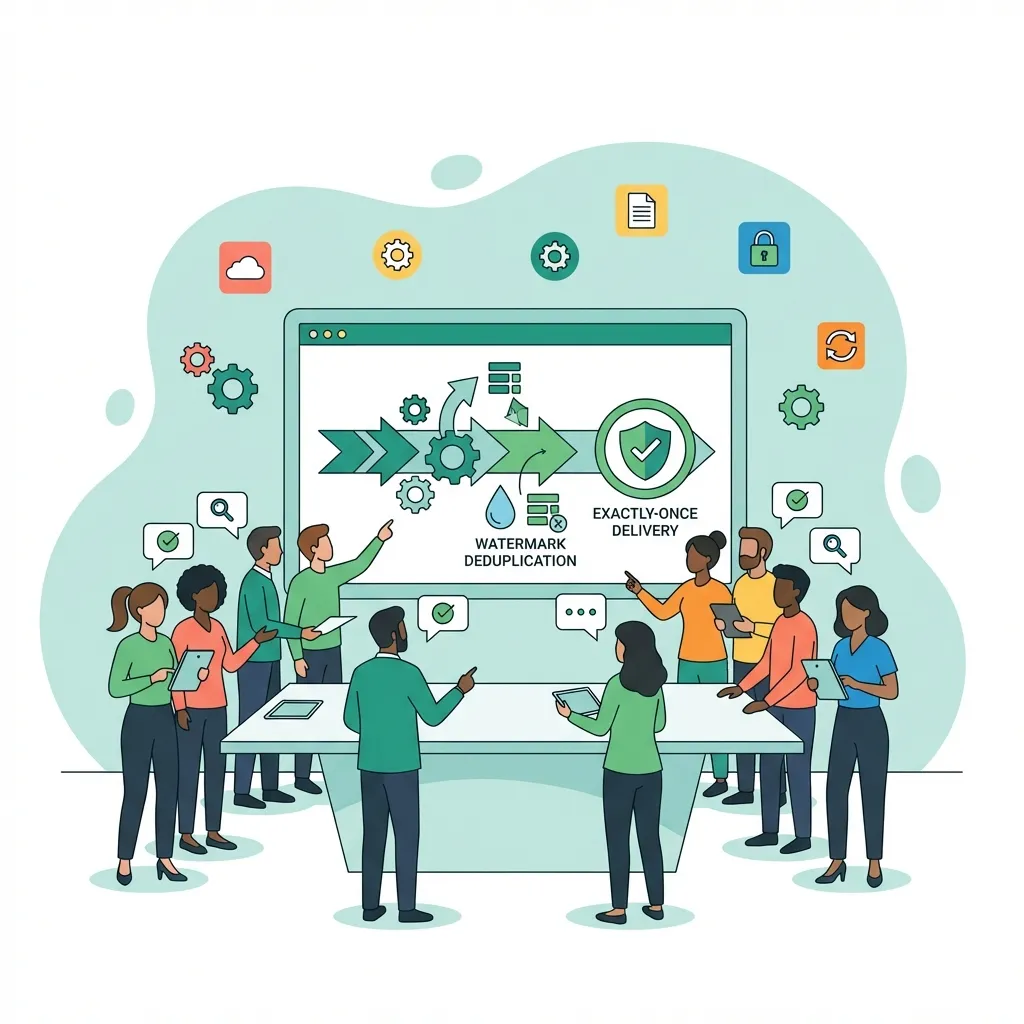
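As a rough illustration of what automated batch grouping could look like, the sketch below packs tables into batches so that each batch stays under a row budget. The `TableSpec` type, the `group_into_batches` function, and the row-budget heuristic are hypothetical names and choices made for this example, not taken from the accelerator's codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableSpec:
    name: str
    pending_rows: int  # rows changed since the last sync (hypothetical metric)


def group_into_batches(tables, max_rows_per_batch):
    """Greedy first-fit-decreasing grouping: largest tables first; each table
    goes into the first batch with room, otherwise a new batch is opened."""
    batches = []  # list of (row_total, [TableSpec, ...])
    for table in sorted(tables, key=lambda t: t.pending_rows, reverse=True):
        for i, (total, members) in enumerate(batches):
            if total + table.pending_rows <= max_rows_per_batch:
                batches[i] = (total + table.pending_rows, members + [table])
                break
        else:
            # No existing batch has room (or the table alone exceeds the budget).
            batches.append((table.pending_rows, [table]))
    return [members for _, members in batches]
```

A table larger than the budget simply ends up alone in its own batch, which keeps one oversized table from delaying the rest.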

Exactly-Once Delivery

Watermark-based deduplication ensures no duplicates or missed records. Two-phase commit with per-batch recovery provides crash resilience.

- ✓ Watermark-based deduplication

- ✓ Two-phase commit transactions

- ✓ Idempotent writes to Aurora

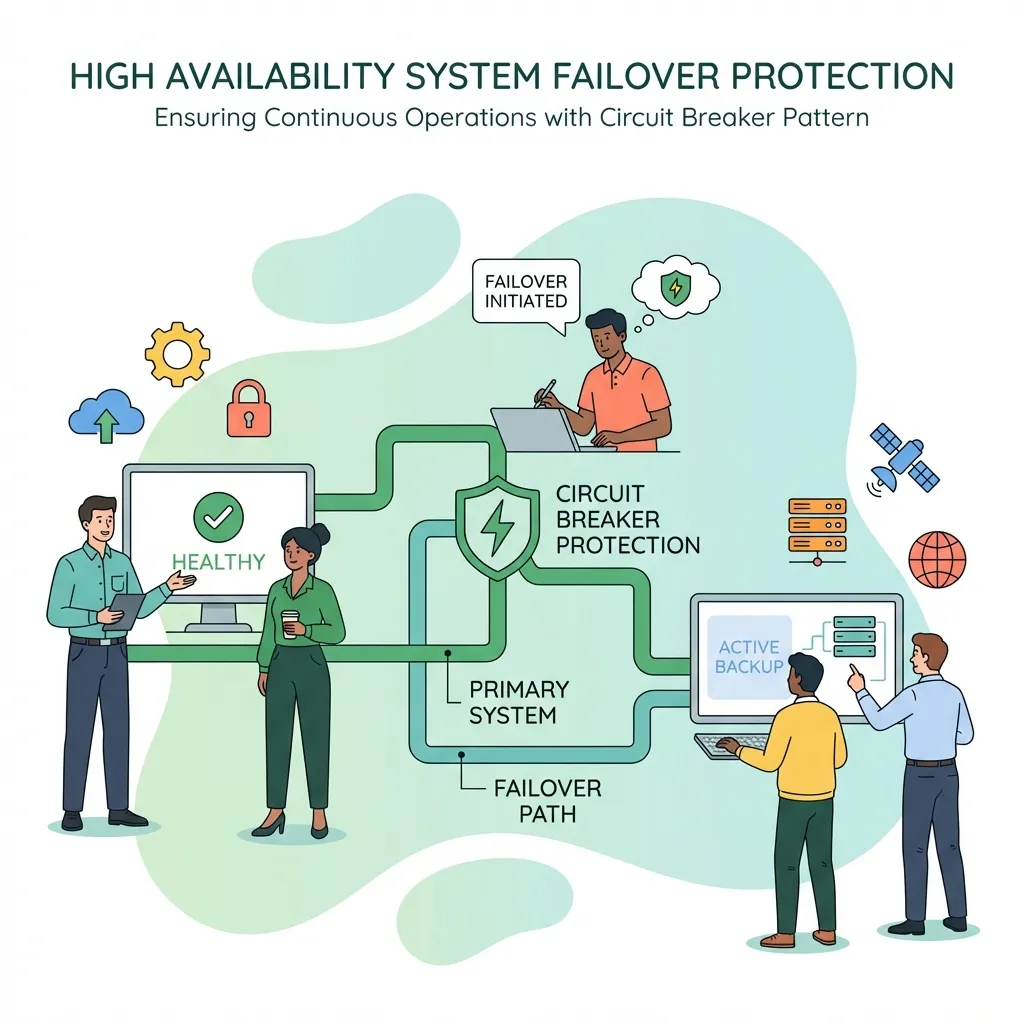
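To make the combination of watermarks and idempotent writes concrete, here is a minimal in-memory sketch. A plain dict stands in for an Aurora table, and the `apply_batch` function and the `pk`, `version`, and `row` field names are assumptions made for illustration. Against MySQL, the upsert step would commonly be expressed with `INSERT ... ON DUPLICATE KEY UPDATE`. The two-phase commit is not shown here.

```python
def apply_batch(target, watermark, changes):
    """Apply change records newer than `watermark` to `target` (a dict keyed by
    primary key, standing in for an Aurora table) and return the new watermark.

    Each change is a dict with hypothetical fields: 'pk', 'version', 'row'.
    """
    new_watermark = watermark
    for change in sorted(changes, key=lambda c: c["version"]):
        if change["version"] <= watermark:
            continue  # already delivered in an earlier run: skip (deduplication)
        # Upsert keyed on the primary key: replaying the same change twice
        # leaves the target in the same state (idempotent write).
        target[change["pk"]] = change["row"]
        new_watermark = max(new_watermark, change["version"])
    return new_watermark
```

In a real pipeline the new watermark would need to be persisted atomically with the written rows; otherwise a crash between the two steps could cause a batch to be skipped or replayed.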

Automatic Failover

RDS Proxy integration provides zero-downtime handling of Aurora maintenance. Circuit breaker patterns prevent cascading failures.

- ✓ Zero-downtime maintenance handling

- ✓ Circuit breaker pattern implementation

- ✓ Automatic retry with exponential backoff
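The two resilience patterns named above can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the accelerator's actual code: the class and function names, the thresholds, and the choice of "full jitter" for the retry delay are all hypothetical.

```python
import random
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are being rejected."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: half-open, allow one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0
        self.opened_at = None
        return result


def backoff_delays(retries, base=0.5, cap=30.0):
    """Deterministic exponential schedule: base * 2**attempt, capped at `cap`."""
    return [min(cap, base * (2 ** attempt)) for attempt in range(retries)]


def retry_with_backoff(func, retries=5, base=0.5, cap=30.0, sleep=time.sleep):
    """Call func, sleeping a jittered exponential delay between failed attempts."""
    delays = backoff_delays(retries, base, cap)
    for attempt in range(retries + 1):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not hammer a dependency the breaker has isolated
        except Exception:
            if attempt == retries:
                raise
            sleep(random.uniform(0, delays[attempt]))  # "full jitter"
```

A typical composition would be `retry_with_backoff(lambda: breaker.call(write_batch))`, where `write_batch` is a placeholder for the database write: transient errors are retried with growing delays, while an open circuit fails fast instead of piling more load onto a struggling database.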

Job Checkpointing

After a crash, jobs resume from the last checkpoint rather than from the beginning. Job-level checkpointing enables incremental recovery and resume capabilities.

- ✓ Stateful streaming checkpoints

- ✓ Incremental recovery

- ✓ No full re-sync required on restart
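The idea of job-level checkpointing can be shown with a small, self-contained sketch. It assumes a JSON file on local disk and a hypothetical `sync_table` callback; a Databricks deployment would more plausibly keep this state in a Delta table or cloud storage, so treat the storage choice and all names here as illustrative only.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the checkpoint via a temp file plus rename, so a crash never
    leaves a half-written checkpoint behind."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())
    os.replace(tmp_path, path)  # atomic rename on POSIX file systems


def load_checkpoint(path):
    """Return the saved state, or an empty state on the first run."""
    try:
        with open(path) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return {"completed_tables": []}


def run_job(tables, checkpoint_path, sync_table):
    """Sync each table once, skipping those already recorded as complete."""
    state = load_checkpoint(checkpoint_path)
    done = set(state["completed_tables"])
    for table in tables:
        if table in done:
            continue
        sync_table(table)
        done.add(table)
        save_checkpoint(checkpoint_path, {"completed_tables": sorted(done)})
```

If `sync_table` raises partway through, rerunning `run_job` with the same checkpoint path picks up at the first table that had not completed.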

Comprehensive Monitoring

Full observability with SLA tracking, cost monitoring, and alerting. Proactive Change Data Feed (CDF) expiry monitoring prevents unexpected full resyncs.

- ✓ Real-time SLA tracking dashboards

- ✓ Detailed cost monitoring

- ✓ Proactive CDF expiry alerts
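A CDF expiry check boils down to comparing the age of the last synced commit against the window in which change data is still readable. The sketch below is an assumption-laden illustration: the function name and status labels are hypothetical, and the retention window is passed in as a parameter because the effective value depends on each table's retention settings and cleanup schedule.

```python
from datetime import datetime, timedelta, timezone


def cdf_expiry_status(last_synced_commit_at, retention, warn_margin, now=None):
    """Classify how close the last synced commit is to falling out of retention.

    Returns (status, time_remaining), where status is "ok", "warning", or "expired".
    """
    now = now or datetime.now(timezone.utc)
    remaining = (last_synced_commit_at + retention) - now
    if remaining <= timedelta(0):
        return "expired", remaining  # change data is gone: full resync needed
    if remaining <= warn_margin:
        return "warning", remaining  # alert operators before it expires
    return "ok", remaining
```

Running a check like this per table on a schedule, and alerting on "warning", gives operators time to catch up a lagging sync before an incremental read becomes impossible.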

Enterprise Security

RBAC, IAM integration, and secrets management in a compliance-ready package. Credentials are sanitized in logs, and audit trails cover all operations.

- ✓ Secrets Management integration

- ✓ Sanitized audit logs

- ✓ IAM role-based access
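Credential sanitization in logs is often done with a logging filter that masks secrets before a record is written. The following is a minimal sketch using Python's standard `logging` module; the regular expressions and the `SanitizingFilter` name are hypothetical and would need tuning to the secrets a given deployment actually handles.

```python
import logging
import re

# Hypothetical patterns; a real deployment would tune these to its own secrets.
_KEY_VALUE = re.compile(
    r"(password|passwd|pwd|token|secret)\s*[=:]\s*[^\s,;&]+", re.IGNORECASE
)
_MYSQL_URL = re.compile(r"(mysql://[^:/\s]+:)[^@\s]+(@)")


def sanitize(text):
    """Mask secret values while keeping the key name for debugging."""
    text = _KEY_VALUE.sub(lambda m: f"{m.group(1)}=***", text)
    return _MYSQL_URL.sub(r"\1***\2", text)


class SanitizingFilter(logging.Filter):
    """Logging filter that rewrites each record's message before it is emitted."""

    def filter(self, record):
        record.msg = sanitize(record.getMessage())
        record.args = ()  # the message is already fully formatted
        return True
```

Attaching the filter to a handler with `handler.addFilter(SanitizingFilter())` applies the masking to every record that handler emits, regardless of which logger produced it.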

Complete Documentation

37+ comprehensive documentation files including runbooks, DR procedures, performance tuning guides, and operator quick start.

- ✓ Runbooks & DR Procedures

- ✓ Performance Tuning Guides

- ✓ Operator Quick Start Playbooks

Fast Deployment

Production-ready in 2-3 weeks vs. 4-6 months for custom development. Includes infrastructure as code and automated setup scripts.

- ✓ Infrastructure as Code (Terraform)

- ✓ Automated setup scripts

- ✓ Production-ready in 2-3 weeks

Technical Specifications

Scale & Performance

- Tables: 500+ tables supported

- Sync Frequency: 15-minute incremental by default (configurable down to 1 minute)

- Throughput: 10,000+ rows/second per table

- RPO: < 1 second (Recovery Point Objective)

Reliability

- Delivery Guarantee: Exactly-once semantics

- Failover: Automatic via RDS Proxy

- Recovery: Job-level checkpointing

- RTO: < 30 minutes (Recovery Time Objective)

Observability

- Metrics: Comprehensive Databricks and CloudWatch metrics

- Dashboards: Cost, audit, and access monitoring

- Alerting: SLA violations, CDF expiry, cost overruns

- Logging: Sanitized logs with credential protection

Compliance & Security

- Standards: SOC 2, GDPR, HIPAA ready

- Security: RBAC, IAM, secrets management

- Audit: Complete audit trails and lineage

- Data Protection: PII detection and classification

What's Included

Complete Implementation

- Production-ready Python codebase (fully tested)

- Databricks notebooks and workflows

- Terraform infrastructure as code

- Unit and integration tests

- CI/CD pipeline configuration

- Security and compliance configurations

Comprehensive Documentation (37+ documents)

- Executive Summary with cost analysis and timeline

- Architecture & Design with detailed diagrams

- Operations Guide with monitoring and troubleshooting

- Security & Compliance framework

- Disaster Recovery procedures and runbooks

- API Reference and Performance Tuning Guide

- Operator Quick Start and Common Scenarios Playbook

- Maintenance Plan and SLA Escalation Procedures

Monitoring & Observability

- Cost monitoring dashboards (Databricks SQL)

- Audit and access dashboards

- SLA tracking and alerting

- CDF expiry monitoring (proactive alerts)

- CloudWatch integration

- Custom metrics and logging

Support & Maintenance

- Initial deployment support (2-3 weeks)

- Knowledge transfer sessions

- 30-day post-deployment support

- Optional ongoing maintenance (annual subscription)

- Updates and enhancements

- Priority support channel

Use Cases

Analytics to Operations

Sync Databricks analytics data to Aurora for operational applications. Enable real-time decision-making with fresh data in MySQL-based systems.

Data Warehouse Sync

Replicate Delta Lake tables to MySQL for reporting and BI tools. Provide consistent data across analytics platforms with 15-minute freshness.

Multi-Region Replication

Sync data across regions for disaster recovery. Maintain data consistency with automatic failover capabilities and an RTO under 30 minutes.

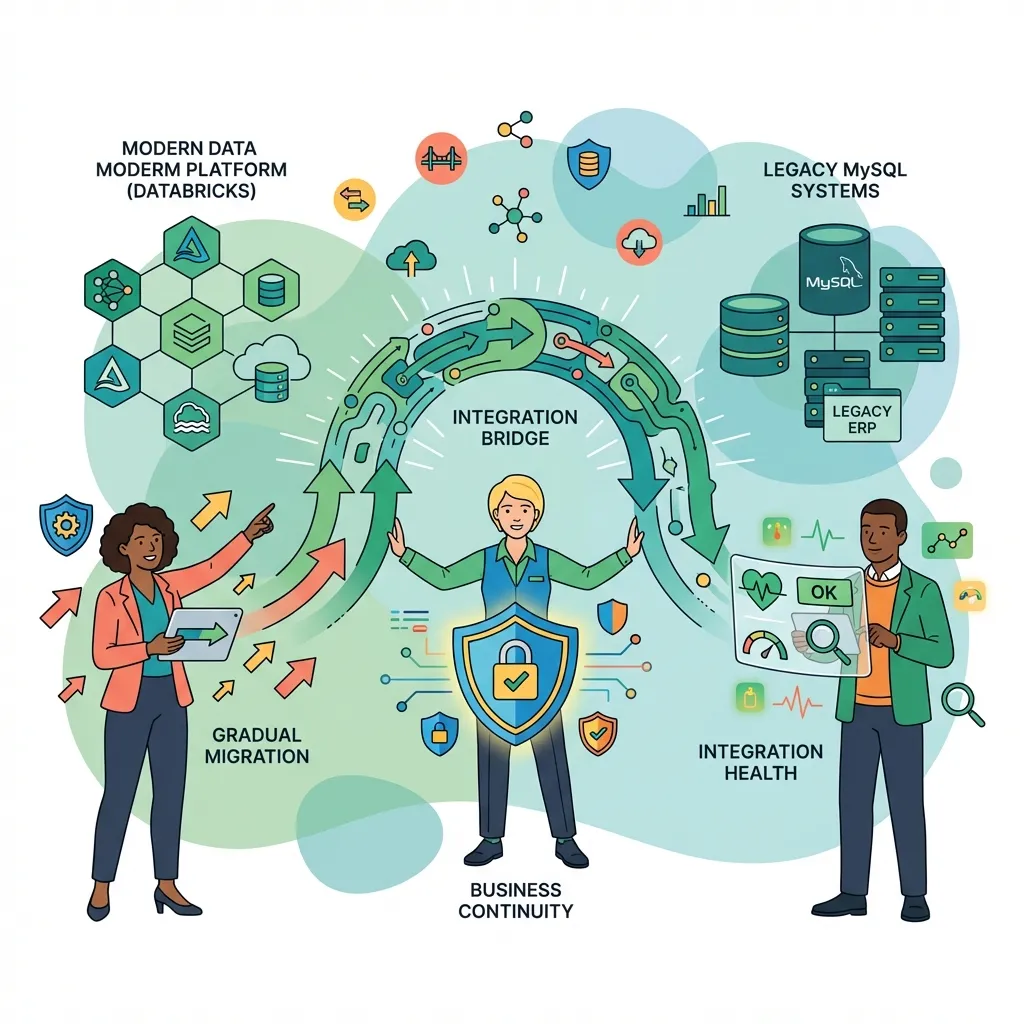

Legacy System Integration

Bridge modern data platforms with legacy MySQL systems. Enable gradual migration without disrupting operations or business continuity.

Get Started with Databricks Aurora Sync

See how Databricks Aurora Sync can transform your data infrastructure. To learn more, contact us directly: office@dhristhi.com
